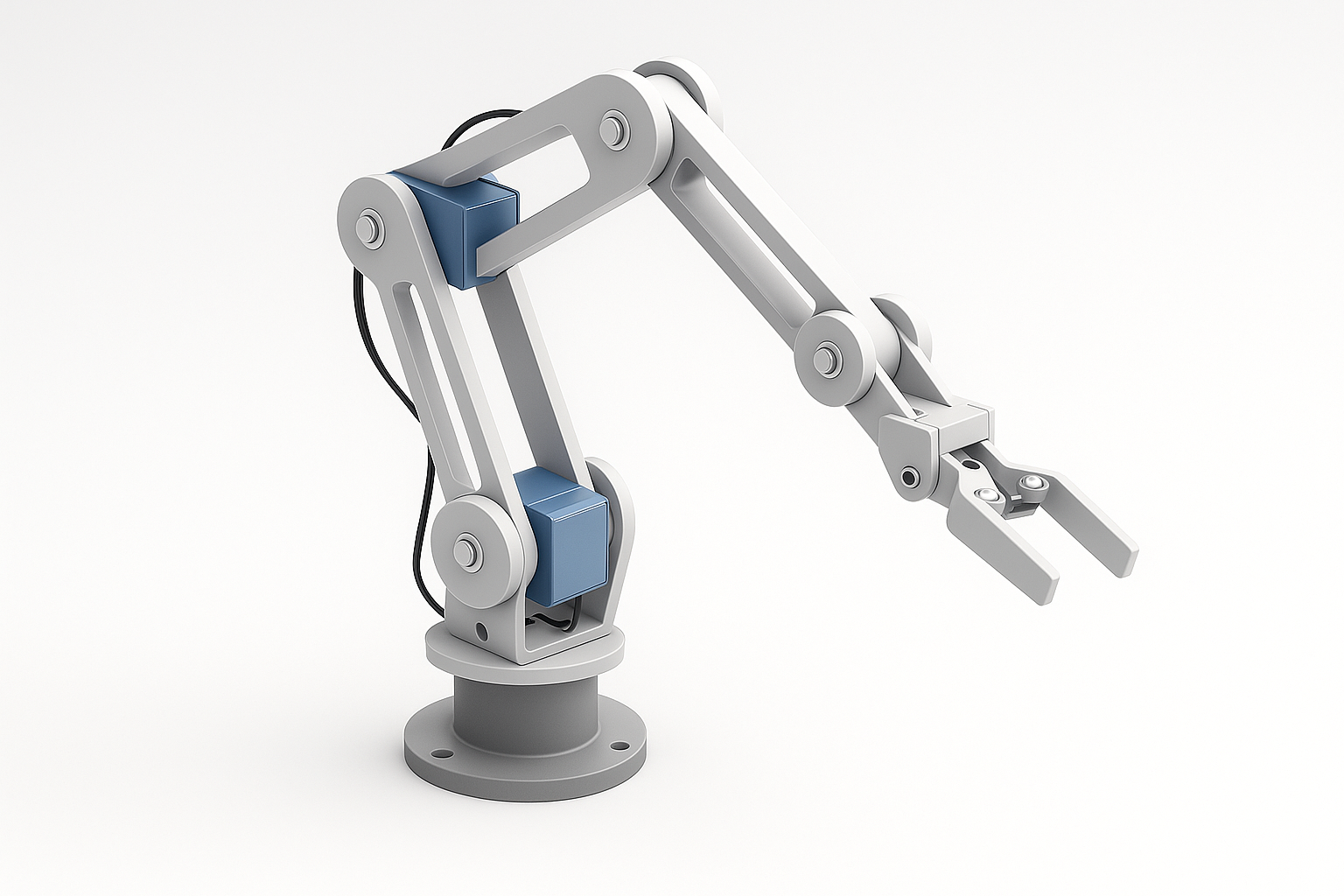

Vision-Guided Pick-and-Place Arm

Low-cost 3-DOF arm with camera-based detection and basic inverse kinematics. I added a fast calibration routine and soft-limit safety to avoid joint overtravel. A Flask dashboard exposes jog controls, teach points, and simple status readouts.

The Problem

Low-cost manipulation that combines vision, kinematics, and a simple operator UI is hard to do on hobby hardware.

What I Built

A 3-DOF arm that detects targets with a camera, runs basic inverse kinematics, and exposes jog and teach controls in a Flask dashboard. I also added soft limits on every joint and a quick calibration routine that aligns the camera and arm frames.
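As a rough illustration of the inverse kinematics and soft-limit check described above, here is a minimal sketch. It assumes a base-yaw joint followed by a two-link shoulder/elbow chain with the shoulder at the origin of the arm frame. The link lengths, limit values, and function names are all hypothetical, and it uses the standard-library `math` module rather than NumPy to stay dependency-free.

```python
import math

# Hypothetical link lengths in millimetres; measure the real arm.
L1_MM = 80.0
L2_MM = 80.0

# Hypothetical soft limits in degrees as (min, max) for base, shoulder, elbow.
# They should sit inside the servo's mechanical travel.
SOFT_LIMITS_DEG = ((-80.0, 80.0), (0.0, 150.0), (-150.0, 0.0))


def inverse_kinematics(x, y, z, l1=L1_MM, l2=L2_MM):
    """Return (base, shoulder, elbow) in degrees for a target in the arm frame."""
    base = math.atan2(y, x)          # yaw toward the target
    r = math.hypot(x, y)             # horizontal reach in the arm's plane
    # Law of cosines for the elbow angle of the two-link chain.
    c = (r * r + z * z - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= c <= 1.0:
        raise ValueError("target is out of reach")
    elbow = -math.acos(c)            # negative sign selects the elbow-up branch
    shoulder = math.atan2(z, r) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return tuple(math.degrees(a) for a in (base, shoulder, elbow))


def forward_kinematics(base_deg, shoulder_deg, elbow_deg, l1=L1_MM, l2=L2_MM):
    """Return (x, y, z) for the given joint angles; handy for checking the IK."""
    b, s, e = (math.radians(a) for a in (base_deg, shoulder_deg, elbow_deg))
    r = l1 * math.cos(s) + l2 * math.cos(s + e)
    z = l1 * math.sin(s) + l2 * math.sin(s + e)
    return r * math.cos(b), r * math.sin(b), z


def within_soft_limits(angles_deg, limits=SOFT_LIMITS_DEG):
    """True only if every joint angle lies inside its (min, max) soft limit."""
    return all(lo <= a <= hi for a, (lo, hi) in zip(angles_deg, limits))
```

A caller would compute the angles for a target, refuse the move if `within_soft_limits` returns `False`, and only then convert the angles to servo commands. Rejecting the move, rather than clamping the angles, keeps the arm from silently going somewhere other than the requested point.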

Tech Notes

Raspberry Pi, Python, OpenCV (HSV thresholding plus contour filters), NumPy-based IK, Flask UI, SG90 servos, two-point coordinate calibration, and soft-limit safety.
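One possible reading of the two-point coordinate calibration is sketched below. It fits a scale, rotation, and translation from two pixel/arm point pairs. It assumes the camera looks straight down on a flat work surface, so perspective can be ignored. The function name `fit_two_point` and the `flip_y` flag are hypothetical; the flag handles the usual case where image rows grow downward while the arm's y axis points the other way.

```python
def fit_two_point(pixel_pts, arm_pts, flip_y=True):
    """Build a pixel -> arm-frame mapping from two point correspondences.

    pixel_pts: two (u, v) pixel coordinates of reference marks.
    arm_pts:   the same two marks as (x, y) in the arm frame.
    """
    def to_complex(pt):
        u, v = pt
        # Negating v mirrors the image axis so a pure rotation and scale suffices.
        return complex(u, -v if flip_y else v)

    p1, p2 = (to_complex(p) for p in pixel_pts)
    w1, w2 = (complex(*w) for w in arm_pts)
    if p1 == p2:
        raise ValueError("calibration points must be distinct")

    a = (w2 - w1) / (p2 - p1)   # complex factor encoding scale and rotation
    b = w1 - a * p1             # translation

    def pixel_to_arm(u, v):
        w = a * to_complex((u, v)) + b
        return w.real, w.imag

    return pixel_to_arm


# Usage with made-up reference points: 0.5 mm per pixel, no rotation.
pixel_to_arm = fit_two_point(
    pixel_pts=[(100, 400), (500, 100)],
    arm_pts=[(-10.0, 50.0), (190.0, 200.0)],
)
target_xy = pixel_to_arm(300, 200)
```

Two points are the minimum needed for this kind of fit, which keeps the routine quick, but they cannot correct lens distortion or a tilted camera. Placing the two marks far apart reduces the effect of pixel noise on the fitted scale and rotation.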

Current Status

Picks simple colored blocks reliably with repeatable placement.

What's Next

ArUco markers for precise pose, visual servoing, a 4th DOF wrist, and a better gripper.